CONTENTS

- Overview: What is ChatGPT?

- The Purpose of ChatGPT

- Shortcomings of ChatGPT

- ChatGPT and Data Privacy: Framing the Question

- Conflict Between GDPR and Artificial Intelligence

- ChatGPT’s Data Collection Practices

- ChatGPT and GDPR Compliance

- Why ChatGPT Doesn't Meet GDPR Requirements

- OpenAI’s Data Breach

- ChatGPT and Data Privacy: Problems in Italy

- OpenAI’s Wider Issues Across the EU

- Deeper Concerns About AI Chat Tools

- ChatGPT’s Response to Data Privacy Concerns

- Privacy-first Technology: the Future for Data-secure Generative AI

- Emerging Privacy-First Innovations

- FAQs

- What is ChatGPT?

- Does ChatGPT collect personal data?

- Is ChatGPT GDPR compliant?

- Is data in ChatGPT secure?

ChatGPT and Data Privacy: Is the OpenAI Tool Secure?

The close connection between ChatGPT and data privacy is pretty evident for anyone who takes an interest.

The OpenAI language tool has exploded into public consciousness in the last year. It’s now hugely popular and not just amongst students late with an assignment.

Today, many businesses use it to answer questions, develop marketing strategies, and create all manner of short-form and long-form content. AI chat is also transforming the tech industry and will no doubt play a key role in the future of digital marketing.

But what happens to the inputted data, and is ChatGPT GDPR-compliant?

This article will explore OpenAI’s fascinating plaything. It will investigate the relationship between ChatGPT, GDPR, and data privacy, and also shine a light on the various issues that feed into this.

Let’s enter the chat!

Overview: What is ChatGPT?

ChatGPT is an AI-powered conversational model created by OpenAI.

It belongs to the GPT (Generative Pre-trained Transformer) family. This technology uses advanced techniques from deep learning to generate text that closely resembles human language.

News about ChatGPT's capabilities spread quickly. Within days of its launch in November 2022, more than a million users had registered with the platform.

This made it the first AI-powered language tool to be adopted by the public on such a large scale, and in early 2023 it was widely reported to be the fastest-growing consumer application in history.

It's definitely a sign of things to come, providing more evidence about how AI will change the future of digital marketing and beyond.

The Purpose of ChatGPT

ChatGPT's main focus is on generating conversational replies, making it ideal for interactive applications, chatbot systems, and conversational marketing. It has been trained on a diverse range of internet text, which helps it understand and respond to a wide array of topics and questions.

One noteworthy feature of ChatGPT is its ability to produce text that is contextually relevant and coherent, creating conversational experiences that resemble human-like interactions.

For businesses, it can be used for anything from email marketing to social media marketing and even for developing wider marketing strategies.

However, it's important to remember that ChatGPT relies on patterns learned from training data and lacks genuine understanding or consciousness.

OpenAI has released different versions of the GPT model, with each iteration aiming to improve upon the limitations of its predecessors. ChatGPT Plus subscribers can currently use GPT-4, the latest model. However, since OpenAI has said it has not yet begun training the next version, it will likely be a long time before we see GPT-5.

Shortcomings of ChatGPT

The different generations have demonstrated impressive growth in language capabilities. However, they also raise concerns about inaccurate information generation, confusing copyright issues, and shady data privacy practices - more on that later.

Ultimately, ChatGPT is a long way off from being a worthy replacement for a good writer. And while many companies are publishing hundreds of AI-generated articles in an attempt to game search rankings, this strategy looks short-term, as Google's ranking systems will likely catch on to the tactic and demote offending pages accordingly.

ChatGPT and Data Privacy: Framing the Question

Understanding the relationship between ChatGPT and data privacy requires that we examine the interplay between user interactions and the processing of information they provide.

When we engage in conversations with ChatGPT, we willingly provide insights into our lives and personal experiences.

However, what happens to this data once it leaves our keyboards? Is it securely safeguarded, or does it become a tradable commodity that can be exploited without our explicit consent? This brings up a whole host of moral and legal issues, but we’ll stick to the legal ones here.

Conflict Between GDPR and Artificial Intelligence

Safeguarding data privacy often involves finding a balance between competing interests.

On one hand, we have the GDPR, a robust legal framework established to protect the privacy rights of people in the EU. It's a stringent piece of personal data protection legislation, and it has real implications for marketers.

On the other hand, AI has an insatiable appetite for vast amounts of data, occasionally crossing boundaries in its pursuit.

ChatGPT uses natural language processing (NLP) and machine learning technologies, something you can learn more about in our Digital Futures Resource Hub. These innovations present a real challenge for GDPR and other data privacy laws, which are currently ill-equipped to regulate such technology.

And more broadly, AI poses a real risk to personal data.

Striking a balance between these opposing forces is therefore a monumental challenge for OpenAI.

ChatGPT’s Data Collection Practices

According to OpenAI’s privacy policy, ChatGPT gathers the following information:

- Account information entered when users sign up or pay for a premium plan

- Information that users type into the chatbot itself

- Identifying data from user devices and browsers

This means OpenAI collects personal data from users and stores a large amount of it on its servers. OpenAI then uses the information to improve the system, and AI trainers may even review it to ensure compliance with OpenAI's policies.

It remains unclear how much personal data ChatGPT uses. However, it stands to reason that training this AI language tool requires massive amounts of data, which may be why OpenAI is so tight-lipped about data volumes.

OpenAI claims that all data used for training is fully anonymized to remove all identifiable information, but it's difficult to verify this with certainty.
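To see why such claims are hard to verify, consider a naive anonymization step that strips obvious identifiers from a prompt using regular expressions. The sketch below is purely illustrative and assumes nothing about how OpenAI actually processes data; the patterns and the `scrub` function are hypothetical. It removes the email address and phone number, but leaves the person's name and city in place, which can still be enough to identify someone.

```python
import re

# Hypothetical patterns for two common identifier types.
# Real PII detection needs far more than this.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def scrub(text: str) -> str:
    """Replace email addresses and phone-number-like strings with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


prompt = "Hi, I'm Jane Doe from Milan, call me on +39 02 1234 5678 or mail jane@example.com"
print(scrub(prompt))
# Hi, I'm Jane Doe from Milan, call me on [PHONE] or mail [EMAIL]
```

Catching names, locations, and indirect identifiers requires much more sophisticated techniques, and even then it is hard for an outside observer to check what was missed.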

ChatGPT and GDPR Compliance

Regardless of what OpenAI says publicly about the security of user data, there are simply too many open questions to call ChatGPT GDPR-compliant.

Admittedly, ChatGPT retains data differently for free and paid users, meaning that you pay for privacy one way or another. Paid users enjoy extra benefits, like managing and deleting their personal data, which is crucial for GDPR compliance.

However, this is easier said than done.

Why ChatGPT Doesn't Meet GDPR Requirements

Once the data users enter into ChatGPT has been used to train the model, that information becomes embedded in the model itself rather than sitting in a record that can simply be deleted. This makes it difficult for the company to remove personal data on request, and consequently makes it harder for OpenAI to meet data privacy demands.
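A toy example makes this deletion problem concrete. The sketch below uses a simple word-pair (bigram) counter as a stand-in for model training; real GPT models learn billions of numeric parameters rather than counts, so this illustrates the principle only, not OpenAI's system. All names and data are hypothetical. Deleting a user's record from the stored dataset leaves the already-trained model unchanged, and only retraining without that record removes what was learned from it.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Optional


def train_bigram(docs: Iterable[str]) -> Dict[str, Counter]:
    """Count word pairs across all documents (a toy stand-in for model training)."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for doc in docs:
        words = doc.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def most_likely_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent word seen after `word`, or None if never seen."""
    followers = model.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical user data: one prompt contains a personal email address.
dataset = {
    "doc1": "please draft a reply to the customer",
    "doc2": "my email is jane.doe@example.com thanks",
}
model = train_bigram(dataset.values())

# The user asks for erasure, so the raw record is deleted...
del dataset["doc2"]

# ...but the trained model still reproduces what it learned from that record.
print(most_likely_next(model, "is"))  # jane.doe@example.com

# Only retraining on the remaining data removes it.
model = train_bigram(dataset.values())
print(most_likely_next(model, "is"))  # None
```

Retraining a large language model from scratch is extremely expensive, which is why removing one person's data after the fact is such a hard problem.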

Its physical data storage practices are also a concern for GDPR. ChatGPT operates out of the US and uses servers at a Microsoft Azure datacenter in Texas.

ChatGPT's servers are in the US, a country that the Court of Justice of the EU found in its 2020 Schrems II ruling does not provide data protection equivalent to EU standards. Storing EU users' data there puts ChatGPT at odds with GDPR requirements for transferring personal data outside the EU. It's an area that has seen the brunt of GDPR enforcement activities, with Google's legal issues centered around its own EU-US data transfers in the wake of the Schrems II decision.

Given all this, it’s fair to say that ChatGPT does not comply with GDPR, and wouldn't be able to complete the compliance checklist in our Marketer’s Guide to GDPR and Data Privacy.

Its data practices are not nearly transparent enough, and it's very likely that the platform is handling user personal data unscrupulously. Users may also find it hard to exercise their rights under GDPR, including their right to be informed and the right to be forgotten.

OpenAI’s Data Breach

OpenAI was already under regulatory scrutiny, and a major data breach has since revealed further weaknesses in ChatGPT's security system.

This has brought more bad press for the company, and it has suffered further consequences from data regulators.

The breach happened during a nine-hour window on March 20th, 2023, between 1:00 and 10:00 Pacific Time (09:00 to 18:00 Central European Time).

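According to OpenAI, the breach may have exposed payment-related information belonging to approximately 1.2% of the ChatGPT Plus subscribers who were active during that window, including names, email addresses, payment addresses, the last four digits of credit card numbers, and card expiration dates. That figure refers only to active Plus subscribers, not to ChatGPT's user base as a whole.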

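The breach was caused by a bug in redis-py, an open-source Redis client library used by ChatGPT, which led to the leakage of sensitive user data. The same bug also allowed some users to see the titles of other users' chat histories.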

Despite OpenAI's immediate and effective countermeasures, such as patching the vulnerability and reassuring its user base, the incident undeniably eroded a significant amount of trust placed in the AI system.

ChatGPT and Data Privacy: Problems in Italy

ChatGPT’s data breach certainly drew attention to data privacy concerns.

However, OpenAI was already on the radar of data protection agencies. It had a challenging 2023, being embroiled in a maelstrom of controversies related to GDPR.

These controversies have centered on questions about the ethics and transparency of OpenAI's data collection practices, including allegations that OpenAI used personal data without authorization, which sent shockwaves throughout the EU.

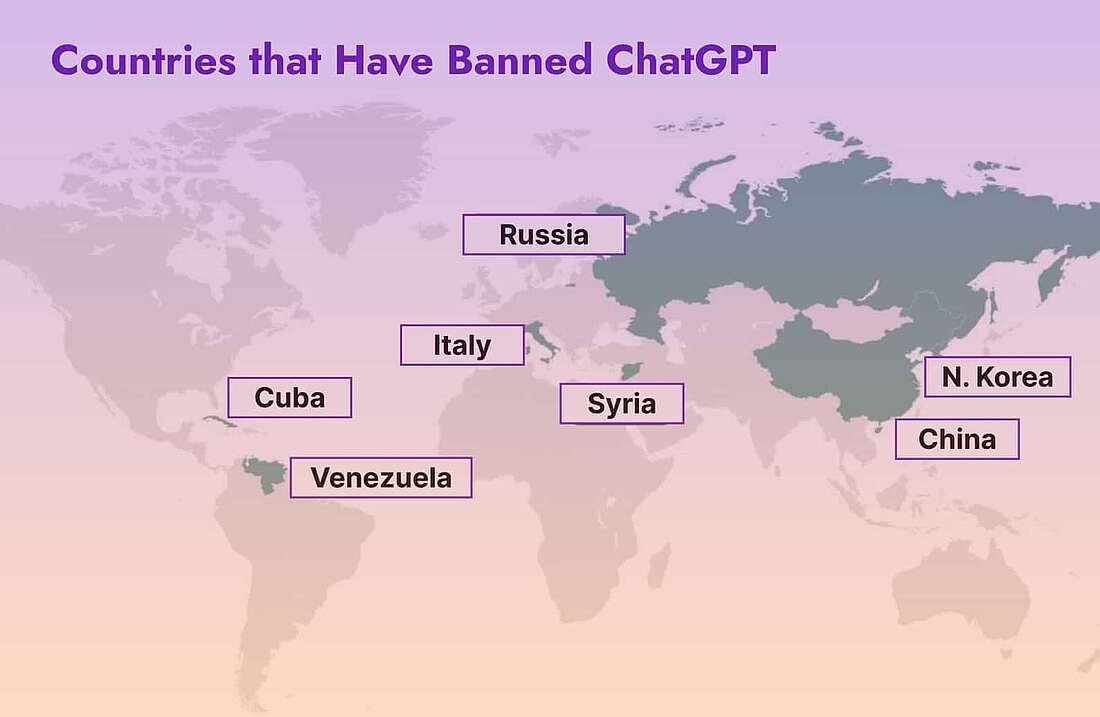

Italy was the first EU country to take action against OpenAI, though ChatGPT is also banned in a range of other locations (see map below).

At the end of March 2023, less than two weeks after the data breach, Italy's data protection authority (GPDP) issued an urgent temporary order instructing the company to stop using the personal data of millions of Italians in its training protocols.

Garante, Italy's data protection agency, argued that OpenAI lacked a legal basis for using this personal data.

ChatGPT's tendency to generate false information was also cited as an issue, since GDPR requires all personal data to be accurate, a point highlighted in Garante's press release.

The agency also criticized the company for lacking any age-verification mechanism, which endangered minors, and its order forced OpenAI to restrict Italian access to its chatbot.

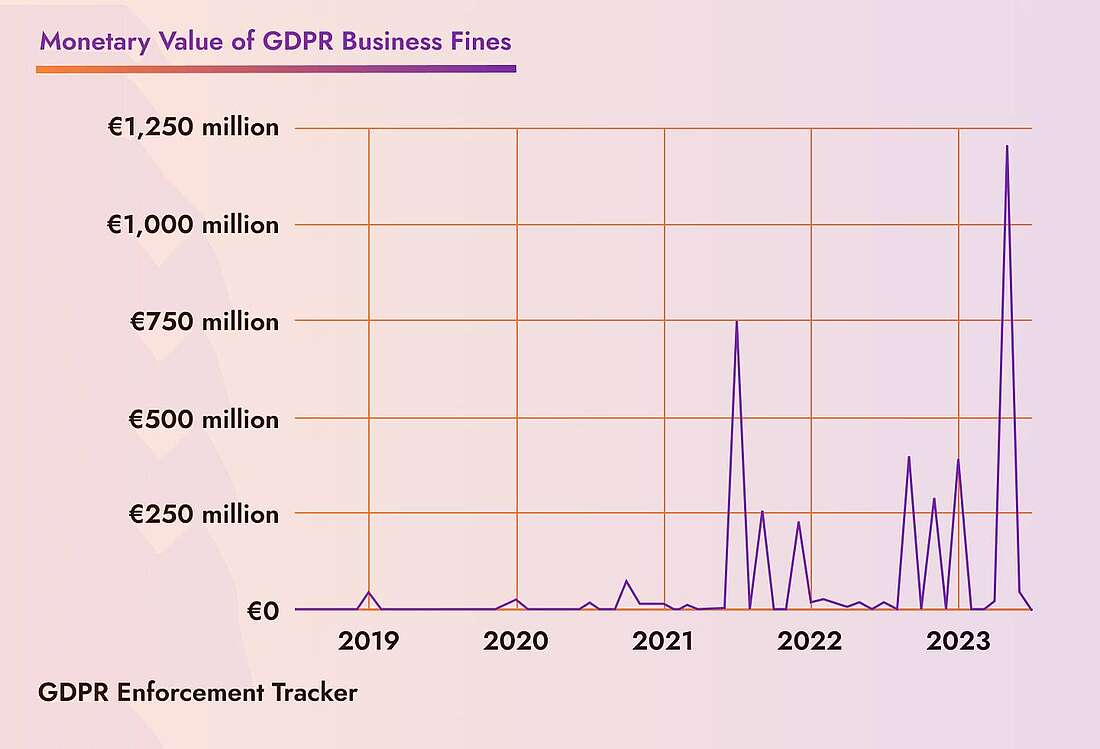

OpenAI’s Wider Issues Across the EU

ChatGPT’s regulatory troubles in Italy are just the beginning.

Competitors like Google's Bard and Microsoft's Azure OpenAI Service (itself powered by OpenAI models) will be paying close attention to its journey.

Since March, at least three EU nations (Germany, France, and Spain) have started investigating ChatGPT. The UK is doing the same, and the European Data Protection Board (EDPB) has established a task force to coordinate these different investigations.

And because GDPR is the strictest and most influential data privacy law in the world, the way regulators apply its principles to OpenAI will have seismic consequences that reach far outside Europe.

However, non-EU countries are also taking an interest, with Canada evaluating OpenAI's data privacy practices under its Personal Information Protection and Electronic Documents Act (PIPEDA).

Deeper Concerns About AI Chat Tools

There are real worries about how AI tools like ChatGPT collect and generate information.

These include how platforms use personal data to train their models, political bias, and a tendency to generate misinformation. Admittedly, these concerns are hardly new, and they mirror the wider discourse about how the internet affects societies.

Misinformation is particularly worrisome for businesses that are using ChatGPT to create marketing content online. These chat tools learn much of their knowledge from the internet, and their proliferation of misinformation could well create a closed feedback loop of inaccurate information.

GDPR can only do so much, given that it wasn't designed to address AI directly. However, policymakers are now drafting legislation aimed specifically at this technology, and the EU's Artificial Intelligence Act (AIA), proposed by the European Commission in 2021, points the way.

This is a sign of things to come, suggesting that the golden age of relatively unregulated AI may soon disappear almost as quickly as it arrived.

ChatGPT’s Response to Data Privacy Concerns

Despite facing challenges, the narrative surrounding ChatGPT and data privacy is not all doom and gloom.

Amidst adversity, the AI tool displayed resilience and underwent improvements.

After addressing the alleged data protection issues, OpenAI persuaded the Garante to lift the ban in Italy at the end of April 2023.

The company accomplished this by explaining that it would offer a tool for Italian users to verify their age on signup. It said it would also provide a new form for EU users that would enable them to exercise their right to object to its use of personal data to train its models - a key requirement under GDPR.

This development demonstrated OpenAI's commitment to data protection standards.

However, OpenAI still has work to do. It has until September 30th, 2023, to introduce a more robust age-verification system that keeps children under 13 off ChatGPT, as well as 13- to 17-year-olds who lack parental consent. Failure to do so could see ChatGPT barred in Italy again, if not further afield.
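The age rules described above can be expressed as a simple signup check. This is a minimal sketch assuming the user's date of birth is self-declared at signup; the function names and return values are hypothetical, and it says nothing about how OpenAI actually verifies age. The genuinely hard part, confirming that the declared birth date is true, is outside its scope.

```python
from datetime import date


def age_on(birth_date: date, today: date) -> int:
    """Full years elapsed, minus one if this year's birthday hasn't happened yet."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if had_birthday else 1)


def signup_decision(birth_date: date, has_parental_consent: bool, today: date) -> str:
    """Hypothetical gate mirroring the requirement described above:
    under 13 -> blocked; 13 to 17 -> allowed only with parental consent; 18+ -> allowed.
    """
    age = age_on(birth_date, today)
    if age < 13:
        return "blocked"
    if age < 18:
        return "allowed" if has_parental_consent else "needs_parental_consent"
    return "allowed"


ref = date(2023, 9, 30)
print(signup_decision(date(2012, 10, 1), False, ref))  # blocked (age 10)
print(signup_decision(date(2008, 1, 1), False, ref))   # needs_parental_consent (age 15)
print(signup_decision(date(2000, 5, 5), False, ref))   # allowed (age 23)
```

Passing `today` as a parameter rather than calling `date.today()` inside the function keeps the check deterministic and easy to test.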

Privacy-first Technology: the Future for Data-secure Generative AI

Data privacy is a pressing concern in the age of AI.

And in effect, ChatGPT serves as a notable case study highlighting the challenges and opportunities in this realm.

Achieving a harmonious coexistence between AI advancements and data privacy requires a multifaceted approach. This approach will need to include robust regulations, user empowerment, and ongoing dialogue.

Technological advancement will also facilitate data privacy in the AI-powered future. OpenAccessGPT and Elastic are developing privacy-first technologies for AI chat and AI search, respectively.

Their innovation will prove all the more welcome as businesses look to capitalize on alternatives to ChatGPT in an ever-tightening regulatory environment.

Emerging Privacy-First Innovations

This fits into the current dynamic of internet technologies, where privacy-focused technology like TWIPLA is emerging to meet digital challenges.

Our website analytics platform enables businesses to track users anonymously thanks to an advanced cookieless model. Consequently, they can collect the data required to grow their website around their customers, all while keeping user data safe.
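Cookieless analytics tools differ in how they work, and the following is not a description of TWIPLA's implementation. One approach used by some privacy-focused analytics tools is to count unique visitors by hashing the visitor's IP address and browser user agent together with a random salt that is kept only in memory and replaced every day. The sketch below assumes that design; the class and method names are hypothetical. Because the salt is discarded daily, the same visitor produces a different identifier on different days, so visits cannot be linked over time, and nothing is stored on the visitor's device.

```python
import hashlib
import hmac
import secrets
from datetime import date
from typing import Optional


class DailyVisitorHasher:
    """Hypothetical cookieless visitor-ID generator using a daily rotating salt."""

    def __init__(self) -> None:
        self._day: Optional[date] = None
        self._salt: bytes = b""

    def _salt_for(self, day: date) -> bytes:
        # Replace the salt whenever the day changes; the old salt is simply dropped,
        # so earlier identifiers can no longer be recomputed or linked to new ones.
        if day != self._day:
            self._day = day
            self._salt = secrets.token_bytes(32)
        return self._salt

    def visitor_id(self, ip: str, user_agent: str, day: date) -> str:
        # The raw IP and user agent are only used here to compute the hash;
        # only the resulting hex digest would be kept for counting.
        message = f"{ip}|{user_agent}".encode("utf-8")
        return hmac.new(self._salt_for(day), message, hashlib.sha256).hexdigest()


hasher = DailyVisitorHasher()
monday_1 = hasher.visitor_id("203.0.113.7", "Mozilla/5.0", date(2023, 6, 5))
monday_2 = hasher.visitor_id("203.0.113.7", "Mozilla/5.0", date(2023, 6, 5))
tuesday = hasher.visitor_id("203.0.113.7", "Mozilla/5.0", date(2023, 6, 6))
print(monday_1 == monday_2)  # True: the same visitor is counted once per day
print(monday_1 == tuesday)   # False: a new salt breaks the link across days
```

Using HMAC with a secret salt, rather than a plain hash of the IP address, matters because the space of IPv4 addresses is small enough that an unsalted hash could be reversed by brute force.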

Privacy-focused technology is also becoming increasingly popular as businesses look to align their systems with customer expectations for data security.

So try out TWIPLA and see what our website analytics solution can do for your business.

FAQs

What is ChatGPT?

ChatGPT is an AI-powered conversational model created by OpenAI. It belongs to the GPT (Generative Pre-trained Transformer) family of models, which uses advanced deep learning techniques to generate text that closely resembles human language. ChatGPT's main focus is on generating conversational replies, making it suitable for interactive applications, chatbot systems, and wider conversational marketing. It has been trained on a diverse range of internet text, enabling it to understand and respond to a wide array of topics and questions.

Does ChatGPT collect personal data?

Yes, ChatGPT collects personal data. This includes users' IP addresses, browser types, and settings. It also collects data on users' interactions with the site. This includes prompts, the types of content they engage with, the features used, and actions taken by the users.

Is ChatGPT GDPR compliant?

No, ChatGPT is not GDPR compliant. This is evident from the Italian data protection authority's temporary ban on the chatbot in March 2023 due to privacy concerns. These concerns center on its use of personal data in its training protocols, and it was also banned for not restricting access to minors - a key element of GDPR compliance. Additionally, it stores data in the US, lacks transparency with its data collection practices, and it's unclear whether users can delete their data on request.

Is data in ChatGPT secure?

Yes, ChatGPT is generally considered to be secure. The platform uses data encryption to protect any inputted data, and OpenAI claims that the chatbot has strong safety measures. However, there is always the risk that data will be intercepted or hacked by a third party, and the data breach in March 2023 has raised concerns about its data security practices.
